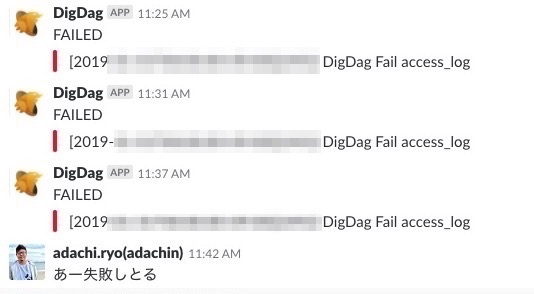

Digdagを運用していると以下のようにエラーが複数出た。

何回も手動でdigdag runしてもエラーが出てしまう。

今回、道なるエラーをどのように調査して、何万行もあるログから破損している行を特定し、修正したのか流れをブログしていきたいと思います。

■Environment

- Digdag/embulk

- s3にアクセスログをBigQueryへ定期的に同期

■Research

(例)日にちは12/31のみにしています

- 12/31のみエラーが出ているので以下のように手動でDigdagを実行をしたがうまくいかない

- 他の日にちでDigdag手動実行をするとうまくいく

- となると、ファイル欠損と予想

- s3にあるgzファイルは以下

- nginx_access_20181231.log_0

- nginx_access_20181231.log_1

・access_log.20181231.dig

|

1 2 3 4 5 6 7 |

_export: s3_target_date: 2018-12-31 bq_target_date: 20181231 _error: sh>: export $(cat config/env | xargs) && /xxxx/digdag/post_slack.sh "[${session_time}][${session_id}] DigDag Fail access_log" +load: sh>: export $(cat config/env | xargs) && /usr/local/bin/embulk run -b $EMBULK_BUNDLE_PATH embulk/access_log.yml.liquid |

・digdag run access_log.20181231.dig

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 |

$ digdag run access_log.20181231.dig 2019-01-02 13:01:03 +0900: Digdag v0.x.xxx 2019-01-02 13:01:06 +0900 [WARN] (main): Reusing the last session time 2019-01-02T00:00:00+00:00. 2019-01-02 13:01:06 +0900 [INFO] (main): Using session /xxxxx/digdag/xxxxx/.digdag/status/20190102T000000+0000. 2019-01-02 13:01:06 +0900 [INFO] (main): Starting a new session project id=1 workflow name=access_log.20181231 session_time=2019-01-02T00:00:00+00:00 2019-01-02 13:01:09 +0900 [INFO] (0016@[0:default]+access_log.20181231+load): sh>: export $(cat config/env | xargs) && /usr/local/bin/embulk run -b $EMBULK_BUNDLE_PATH embulk/access_log.yml.liquid 2019-01-02 13:01:10.088 +0900: Embulk v0.x.xx 2019-01-02 13:01:24.834 +0900 [INFO] (0001:transaction): Loaded plugin ~省略~ 2019-01-02 13:01:35.014 +0900 [INFO] (0001:transaction): Using local thread executor with max_threads=4 / tasks=2 2019-01-02 13:01:35.408 +0900 [INFO] (0001:transaction): embulk-output-bigquery: Create dataset... xxxxxxx:access_log 2019-01-02 13:01:39.627 +0900 [INFO] (0001:transaction): embulk-output-bigquery: Create table... xxxxxxx:access_log.LOAD_TEMP_93d3dad2_c87e_4ac8_901c_1e585b8b608f_access_log_20181231 2019-01-02 13:01:41.208 +0900 [INFO] (0001:transaction): {done: 0 / 2, running: 0} 2019-01-02 13:01:42.280 +0900 [INFO] (0020:task-0001): embulk-output-bigquery: create /tmp/embulk_output_bigquery_20190102-17919-1d3w1wm.17919.2000.jsonl.gz 2019-01-02 13:01:42.293 +0900 [INFO] (0019:task-0000): embulk-output-bigquery: create /tmp/embulk_output_bigquery_20190102-17919-1d3w1wm.17919.2002.jsonl.gz 2019-01-02 13:02:24.300 +0900 [INFO] (0001:transaction): {done: 1 / 2, running: 1} 2019-01-02 13:06:00.332 +0900 [INFO] (0001:transaction): {done: 2 / 2, running: 0} 2019-01-02 13:06:00.335 +0900 [INFO] (0001:transaction): embulk-output-bigquery: Delete table... xxxxx:access_log.LOAD_TEMP_93d3dad2_c87e_4ac8_901c_1e585b8b608f_access_log_20181231 org.embulk.exec.PartialExecutionException: java.lang.NullPointerException at org.embulk.exec.BulkLoader$LoaderState.buildPartialExecuteException(BulkLoader.java:375) at org.embulk.exec.BulkLoader.doRun(BulkLoader.java:607) at org.embulk.exec.BulkLoader.access$000(BulkLoader.java:35) at org.embulk.exec.BulkLoader$1.run(BulkLoader.java:391) at org.embulk.exec.BulkLoader$1.run(BulkLoader.java:387) at org.embulk.spi.Exec.doWith(Exec.java:25) at org.embulk.exec.BulkLoader.run(BulkLoader.java:387) at org.embulk.EmbulkEmbed.run(EmbulkEmbed.java:180) at org.embulk.EmbulkRunner.runInternal(EmbulkRunner.java:337) at org.embulk.EmbulkRunner.run(EmbulkRunner.java:174) at org.embulk.cli.EmbulkRun.runSubcommand(EmbulkRun.java:467) at org.embulk.cli.EmbulkRun.run(EmbulkRun.java:99) at org.embulk.cli.Main.main(Main.java:28) Caused by: java.lang.NullPointerException at org.embulk.filter.row.where.StringOpExp.eval(org/embulk/filter/row/where/ParserExp.java:257) at org.embulk.filter.row.where.LogicalOpExp.eval(org/embulk/filter/row/where/ParserExp.java:369) at org.embulk.filter.row.GuardColumnVisitorWhereImpl.visitColumns(org/embulk/filter/row/GuardColumnVisitorWhereImpl.java:26) at org.embulk.filter.row.RowFilterPlugin$1.add(org/embulk/filter/row/RowFilterPlugin.java:133) at org.embulk.spi.PageBuilder.doFlush(org/embulk/spi/PageBuilder.java:249) at org.embulk.spi.PageBuilder.flush(org/embulk/spi/PageBuilder.java:255) at org.embulk.spi.PageBuilder.addRecord(org/embulk/spi/PageBuilder.java:232) at org.embulk.parser.jsonl.JsonlParserPlugin.run(org/embulk/parser/jsonl/JsonlParserPlugin.java:172) at org.embulk.spi.FileInputRunner.run(org/embulk/spi/FileInputRunner.java:156) at org.embulk.spi.util.Executors.process(org/embulk/spi/util/Executors.java:67) at org.embulk.spi.util.Executors.process(org/embulk/spi/util/Executors.java:42) at org.embulk.exec.LocalExecutorPlugin$DirectExecutor$1.call(org/embulk/exec/LocalExecutorPlugin.java:184) at org.embulk.exec.LocalExecutorPlugin$DirectExecutor$1.call(org/embulk/exec/LocalExecutorPlugin.java:180) at java.util.concurrent.FutureTask.run(java/util/concurrent/FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(java/util/concurrent/ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(java/util/concurrent/ThreadPoolExecutor.java:624) at java.lang.Thread.run(java/lang/Thread.java:748) Error: java.lang.NullPointerException 2019-01-02 13:06:01 +0900 [ERROR] (0016@[0:default]+access_log.20181231+load): Task failed with unexpected error: Command failed with code 1 java.lang.RuntimeException: Command failed with code 1 at io.digdag.standards.operator.ShOperatorFactory$ShOperator.runTask(ShOperatorFactory.java:143) at io.digdag.util.BaseOperator.run(BaseOperator.java:35) at io.digdag.core.agent.OperatorManager.callExecutor(OperatorManager.java:312) at io.digdag.cli.Run$OperatorManagerWithSkip.callExecutor(Run.java:694) at io.digdag.core.agent.OperatorManager.runWithWorkspace(OperatorManager.java:254) at io.digdag.core.agent.OperatorManager.lambda$runWithHeartbeat$2(OperatorManager.java:137) at io.digdag.core.agent.LocalWorkspaceManager.withExtractedArchive(LocalWorkspaceManager.java:25) at io.digdag.core.agent.OperatorManager.runWithHeartbeat(OperatorManager.java:135) at io.digdag.core.agent.OperatorManager.run(OperatorManager.java:119) at io.digdag.cli.Run$OperatorManagerWithSkip.run(Run.java:676) at io.digdag.core.agent.MultiThreadAgent.lambda$null$0(MultiThreadAgent.java:127) at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) at java.util.concurrent.FutureTask.run(FutureTask.java:266) at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) at java.lang.Thread.run(Thread.java:748) 2019-01-02 13:06:01 +0900 [WARN] (0016@[0:default]+access_log.20181231^failure-alert): Skipped 2019-01-02 13:06:02 +0900 [WARN] (0016@[0:default]+access_log.20181231^error): Skipped error: * +access_log.20181231+load: Command failed with code 1 (runtime) |

Error: java.lang.NullPointerException

■Try delete nginx_access_20181231.log_1

nginx_access_20181231.log_1を削除してnginx_access_20181231.log_0のみをdigdag runすると!

|

1 |

Success. Task state is saved at /xxxxx/digdag/xxxxx/.digdag/status/20190102T000000+0000 directory. |

BigQueryに同期できた!?

となると、ファイル欠損しているのはnginx_access_20181231.log_1となるので、

10000行までファイルを分割して、digdag runできるか確認する。

■Try use command split

・10000行までファイルを分割する

|

1 2 3 4 5 6 7 8 9 10 |

$ split -l 10000 nginx_access_20181231.log_1 $ ll total 3814384 -rw-r--r-- 1 xxxxx staff 4688372 1 7 17:31 xaa -rw-r--r-- 1 xxxxx staff 4668351 1 7 17:31 xab ~省略~ $ mkdir file $ mv x* file |

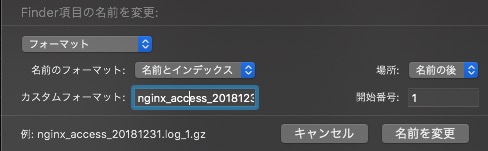

・ファイル名をインデックス順に変更

この170以上あるファイルを半分ずつS3にアップロードしてdigdag runしまくる!

(これはツライ?)

?…..

すると一部だけ欠損を目撃しました?

どうやらカーネルパニックになり、サーバが急に落ちて、ログも死んだのでしょう。

■まとめ

これで道なるエラーもこの流れですばやく対応できるでしょう!!

0件のコメント