You can export CloudWatch Logs to S3 on a schedule by using Lambda.

This time I built it in Python 2.7, targeting the RDS general-log and slowquery-log.

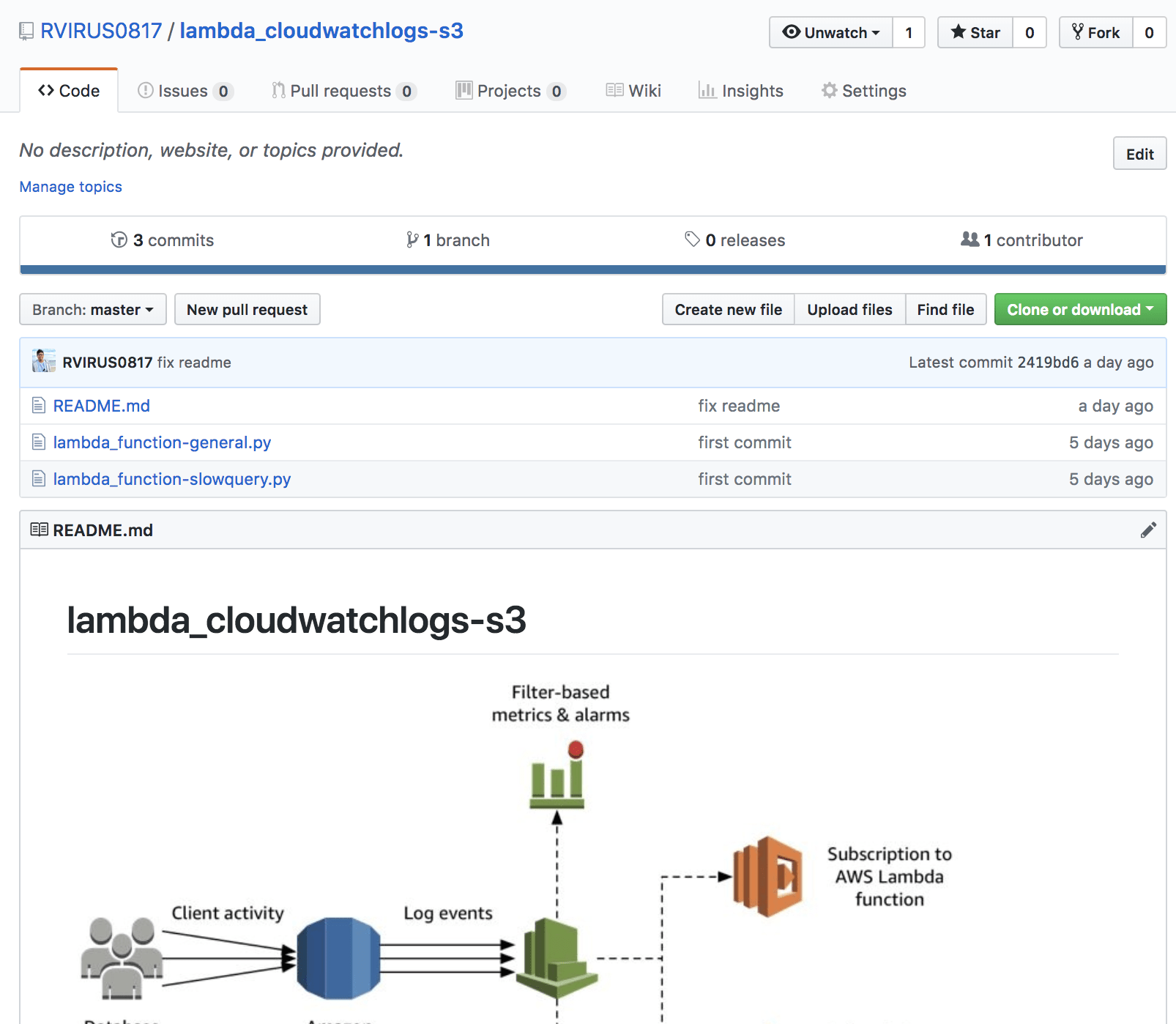

■RVIRUS0817/lambda_cloudwatchlogs-s3

https://github.com/RVIRUS0817/lambda_cloudwatchlogs-s3

■RDS

・Log exports

- Audit log

- Error log

- General log

- Slow query log
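The log exports above are a setting on the cluster. The `/aws/rds/cluster/...` log group names suggest an Aurora MySQL cluster. Assuming that, the same setting can be applied from boto3 through `modify_db_cluster` and its `CloudwatchLogsExportConfiguration` argument. This is a minimal sketch. The cluster identifier you pass in is hypothetical, and `log_group_name` only mirrors the naming visible in the Lambda code later in this post.

```python
# Log types matching the four exports listed above
LOG_TYPES = ['audit', 'error', 'general', 'slowquery']


def build_log_export_config(log_types):
    """Build the CloudwatchLogsExportConfiguration argument for modify_db_cluster."""
    return {'EnableLogTypes': list(log_types)}


def log_group_name(cluster_id, log_type):
    # RDS publishes each log type to /aws/rds/cluster/<cluster-id>/<log-type>
    return '/aws/rds/cluster/%s/%s' % (cluster_id, log_type)


def enable_log_exports(cluster_id, log_types=LOG_TYPES):
    # Imported here so the pure helpers above work without boto3 installed
    import boto3

    rds = boto3.client('rds')
    return rds.modify_db_cluster(
        DBClusterIdentifier=cluster_id,  # hypothetical identifier supplied by the caller
        CloudwatchLogsExportConfiguration=build_log_export_config(log_types),
        ApplyImmediately=True,
    )
```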

・Parameter group

- slow_query_log → 1

- general_log → 1

- long_query_time → 5 (seconds)

- log_output → FILE
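The four parameter values can also be applied with boto3. This sketch assumes they go into a DB cluster parameter group; depending on the engine version they may instead belong in the instance-level DB parameter group (`modify_db_parameter_group`). The group name is hypothetical. `ApplyMethod` is set to `immediate` because these four are dynamic variables in MySQL. Static parameters would need `pending-reboot`.

```python
# Values from the parameter group list above
LOG_PARAMETERS = {
    'slow_query_log': '1',
    'general_log': '1',
    'long_query_time': '5',  # seconds
    'log_output': 'FILE',    # TABLE would write to mysql.general_log / mysql.slow_log instead
}


def build_parameters(values, apply_method='immediate'):
    """Turn a name->value dict into the Parameters list RDS expects."""
    return [
        {'ParameterName': name, 'ParameterValue': value, 'ApplyMethod': apply_method}
        for name, value in sorted(values.items())
    ]


def apply_log_parameters(group_name):
    import boto3  # imported lazily so build_parameters works without boto3

    rds = boto3.client('rds')
    return rds.modify_db_cluster_parameter_group(
        DBClusterParameterGroupName=group_name,  # hypothetical group name
        Parameters=build_parameters(LOG_PARAMETERS),
    )
```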

■IAM role (Lambda execution role)

- CloudWatchLogsFullAccess (AWS managed policy)
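CloudWatchLogsFullAccess works but grants much more than the function uses. As a narrower alternative, here is a sketch of an identity policy built in Python. It assumes the function only needs to start and inspect export tasks and write its own logs. `Resource` is left as `"*"` for simplicity. Check the IAM service reference for CloudWatch Logs before narrowing it to specific log-group ARNs.

```python
import json


def build_export_role_policy():
    """Minimal identity policy sketch for the export Lambda."""
    return {
        'Version': '2012-10-17',
        'Statement': [
            {
                # Start export tasks and check their status
                'Effect': 'Allow',
                'Action': ['logs:CreateExportTask', 'logs:DescribeExportTasks'],
                'Resource': '*',
            },
            {
                # Let the function write its own logs (print output)
                'Effect': 'Allow',
                'Action': ['logs:CreateLogGroup', 'logs:CreateLogStream', 'logs:PutLogEvents'],
                'Resource': '*',
            },
        ],
    }


print(json.dumps(build_export_role_policy(), indent=2))
```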

■S3 Policy

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.ap-northeast-1.amazonaws.com"
      },
      "Action": "s3:GetBucketAcl",
      "Resource": "arn:aws:s3:::xxxxxxxxxxxxxxx"
    },
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "xxx.ap-northeast-1.amazonaws.com"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::xxxxxxxxxxxxxxxxx/*",
      "Condition": {
        "StringEquals": {
          "s3:x-amz-acl": "bucket-owner-full-control"
        }
      }
    }
  ]
}
```
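The bucket policy can also be generated for a given bucket and region and applied with boto3's `put_bucket_policy`. In this sketch both statements use the `logs.<region>.amazonaws.com` service principal, which is the principal CloudWatch Logs uses when it exports to S3. The bucket name is a placeholder.

```python
import json


def build_bucket_policy(bucket, region):
    """Bucket policy letting CloudWatch Logs in `region` export into `bucket`."""
    principal = {'Service': 'logs.%s.amazonaws.com' % region}
    return {
        'Version': '2012-10-17',
        'Statement': [
            {
                # Lets CloudWatch Logs read the bucket ACL
                'Effect': 'Allow',
                'Principal': principal,
                'Action': 's3:GetBucketAcl',
                'Resource': 'arn:aws:s3:::%s' % bucket,
            },
            {
                # Lets CloudWatch Logs write objects, giving the bucket owner full control
                'Effect': 'Allow',
                'Principal': principal,
                'Action': 's3:PutObject',
                'Resource': 'arn:aws:s3:::%s/*' % bucket,
                'Condition': {'StringEquals': {'s3:x-amz-acl': 'bucket-owner-full-control'}},
            },
        ],
    }


def apply_bucket_policy(bucket, region='ap-northeast-1'):
    import boto3  # imported lazily so build_bucket_policy works without boto3

    s3 = boto3.client('s3')
    s3.put_bucket_policy(Bucket=bucket, Policy=json.dumps(build_bucket_policy(bucket, region)))
```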

■Lambda

- Runtime: Python 2.7

- Timeout: 1:30 (1 minute 30 seconds)

- lambda_function-general.py

```python
import datetime
import time

import boto3

log_name_general = 'general-log'
log_group_name_general = '/aws/rds/cluster/xxxxx/general'
s3_bucket_name = 'xxxxxxxxxx'


def get_s3_prefix():
    # Built per invocation: a module-level value would be frozen at cold start
    # and reused by warm containers on later days. Result: .../YYYY/MM/DD
    today = datetime.date.today()
    return 'xxxx/log/mysql/' + log_name_general + today.strftime('/%Y/%m/%d')


def get_from_timestamp():
    # Epoch seconds for 00:00:00 of the previous day
    # (function's local time, which is UTC on Lambda by default)
    today = datetime.date.today()
    yesterday = datetime.datetime.combine(today - datetime.timedelta(days=1),
                                          datetime.time(0, 0, 0))
    return int(time.mktime(yesterday.timetuple()))


def get_to_timestamp(from_ts):
    # 00:00:00 + 86399 s = 23:59:59 of the same day
    return from_ts + (60 * 60 * 24) - 1


def lambda_handler(event, context):
    from_ts = get_from_timestamp()
    to_ts = get_to_timestamp(from_ts)
    # print() with a single argument works on both Python 2.7 and Python 3
    print('Timestamp: from_ts %s, to_ts %s' % (from_ts, to_ts))

    client = boto3.client('logs')
    response = client.create_export_task(
        logGroupName=log_group_name_general,
        fromTime=from_ts * 1000,  # the API takes epoch milliseconds
        to=to_ts * 1000,
        destination=s3_bucket_name,
        destinationPrefix=get_s3_prefix()
    )
    return response
```

- lambda_function-slowquery.py

```python
import datetime
import time

import boto3

log_name_slow = 'slowquery-log'
log_group_name_slow = '/aws/rds/cluster/xxxx/slowquery'
s3_bucket_name = 'xxxxxxxxxxxxx'


def get_s3_prefix():
    # Built per invocation: a module-level value would be frozen at cold start
    # and reused by warm containers on later days. Result: .../YYYY/MM/DD
    today = datetime.date.today()
    return 'xxxxxx/log/mysql/' + log_name_slow + today.strftime('/%Y/%m/%d')


def get_from_timestamp():
    # Epoch seconds for 00:00:00 of the previous day
    # (function's local time, which is UTC on Lambda by default)
    today = datetime.date.today()
    yesterday = datetime.datetime.combine(today - datetime.timedelta(days=1),
                                          datetime.time(0, 0, 0))
    return int(time.mktime(yesterday.timetuple()))


def get_to_timestamp(from_ts):
    # 00:00:00 + 86399 s = 23:59:59 of the same day
    return from_ts + (60 * 60 * 24) - 1


def lambda_handler(event, context):
    from_ts = get_from_timestamp()
    to_ts = get_to_timestamp(from_ts)
    # print() with a single argument works on both Python 2.7 and Python 3
    print('Timestamp: from_ts %s, to_ts %s' % (from_ts, to_ts))

    client = boto3.client('logs')
    response = client.create_export_task(
        logGroupName=log_group_name_slow,
        fromTime=from_ts * 1000,  # the API takes epoch milliseconds
        to=to_ts * 1000,
        destination=s3_bucket_name,
        destinationPrefix=get_s3_prefix()
    )
    return response
```
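The two functions differ only in their names. CloudWatch Logs also documents a limit of one active (pending or running) export task per account, so two functions fired on the same schedule can have one request rejected. As a sketch, a single function can export both log groups in turn. It waits on `describe_export_tasks` until each task finishes before starting the next. The code targets Python 3, since AWS has ended support for the Python 2.7 Lambda runtime. The names in `EXPORTS` are placeholders, and waiting for two exports may need a longer timeout than 1:30.

```python
import datetime
import time

# Placeholders mirroring the masked names in this post (hypothetical values)
S3_BUCKET = 'xxxxxxxxxx'
S3_BASE = 'xxxx/log/mysql'
EXPORTS = [
    ('general-log', '/aws/rds/cluster/xxxxx/general'),
    ('slowquery-log', '/aws/rds/cluster/xxxxx/slowquery'),
]
FINAL_STATES = ('COMPLETED', 'FAILED', 'CANCELLED')


def wait_for_export(client, task_id, poll_seconds=5, sleep=time.sleep):
    """Poll DescribeExportTasks until the task reaches a final state."""
    while True:
        task = client.describe_export_tasks(taskId=task_id)['exportTasks'][0]
        code = task['status']['code']
        if code in FINAL_STATES:
            return code
        sleep(poll_seconds)


def export_all(client, from_ms, to_ms, day, sleep=time.sleep):
    """Export each log group one after another (one active task per account)."""
    results = {}
    for log_name, group in EXPORTS:
        prefix = '%s/%s/%s' % (S3_BASE, log_name, day.strftime('%Y/%m/%d'))
        task_id = client.create_export_task(
            logGroupName=group,
            fromTime=from_ms,
            to=to_ms,
            destination=S3_BUCKET,
            destinationPrefix=prefix,
        )['taskId']
        results[log_name] = wait_for_export(client, task_id, sleep=sleep)
    return results


def lambda_handler(event, context):
    import boto3  # imported here so export_all can be exercised without boto3

    today = datetime.date.today()
    yesterday = datetime.datetime.combine(today - datetime.timedelta(days=1),
                                          datetime.time(0, 0, 0))
    from_ms = int(time.mktime(yesterday.timetuple())) * 1000
    to_ms = from_ms + 86399 * 1000  # 23:59:59, same range as the functions above
    # The prefix uses the run date, as in the functions above
    return export_all(boto3.client('logs'), from_ms, to_ms, today)
```

Passing the client in, rather than creating it inside `export_all`, lets the loop be tried with a stand-in object that has the same two methods.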

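- To export one day at a time, the range is set to 00:00:00 through 23:59:59 of the previous day

- The function takes the timestamp for 00:00 of the day before the run and targets the logs in the following 86,399 seconds (00:00:00 to 23:59:59)

- The timestamps computed in Python are multiplied by 1000, because create_export_task takes `fromTime` and `to` in epoch milliseconds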
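Lambda's execution environment uses UTC by default, so `time.mktime` in the functions above computes the previous *UTC* day. If the goal is the previous day in JST, the window can be computed explicitly. This is a sketch for Python 3 (`previous_day_window_ms` is a hypothetical helper name). It also sets `to` to 23:59:59.999, because CloudWatch Logs exports events up to and including `to`. A value of 23:59:59.000 would skip events logged within the day's last second.

```python
from datetime import datetime, timedelta, timezone

JST = timezone(timedelta(hours=9))
DAY_MS = 24 * 60 * 60 * 1000


def previous_day_window_ms(now):
    """Return (fromTime, to) in epoch ms covering the whole previous JST day.

    `now` must be timezone-aware.
    """
    local_now = now.astimezone(JST)
    start = (local_now - timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0)
    from_ms = int(start.timestamp()) * 1000
    return from_ms, from_ms + DAY_MS - 1  # to = 23:59:59.999 JST


from_ms, to_ms = previous_day_window_ms(datetime.now(timezone.utc))
```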

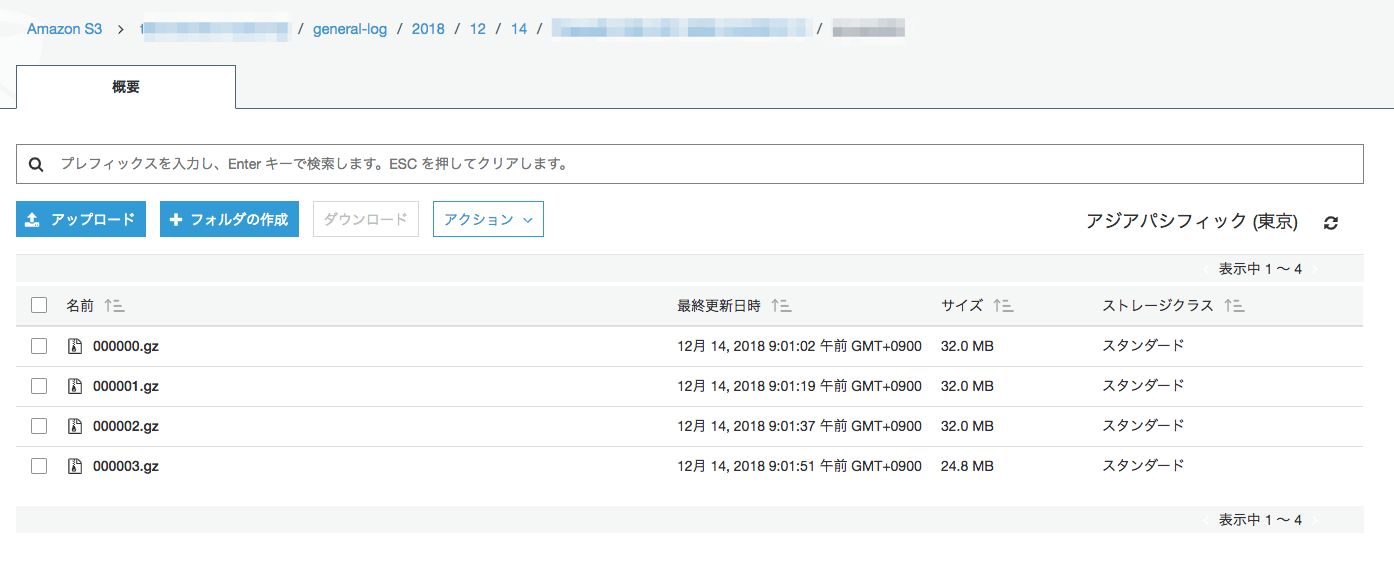

- The S3 prefix is year/month/day (YYYY/MM/DD)
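In the functions above, the prefix date is the date the function runs, which is one day after the day being exported. To file each export under the day it covers, pass that day instead. A small sketch (`build_prefix` and the dates are hypothetical):

```python
import datetime


def build_prefix(base, log_name, day):
    """Return '<base>/<log_name>/YYYY/MM/DD' for the given date."""
    return '%s/%s/%s' % (base.rstrip('/'), log_name, day.strftime('%Y/%m/%d'))


run_day = datetime.date(2018, 3, 5)  # hypothetical run date
exported_day = run_day - datetime.timedelta(days=1)

run_prefix = build_prefix('xxxx/log/mysql', 'general-log', run_day)        # as in the functions above
covered_prefix = build_prefix('xxxx/log/mysql', 'general-log', exported_day)  # alternative
print(run_prefix)
print(covered_prefix)
```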

■CloudWatch > Events (schedule rule)

Schedule expression (cron is evaluated in UTC, so 15:00 UTC = 00:00 JST):

```
cron(0 15 * * ? *)
```
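CloudWatch Events (now EventBridge) cron expressions have six fields: minutes, hours, day-of-month, month, day-of-week and year. One of the two day fields must be `?`, and the schedule is evaluated in UTC. Below is a sketch that builds the expression from a JST time and attaches the rule to the function with boto3. The rule name and statement ID are hypothetical, and the function name and ARN are whatever your Lambda uses.

```python
JST_OFFSET_HOURS = 9


def daily_cron_utc(jst_hour, minute=0):
    """Cron expression for a daily run at jst_hour:minute JST (evaluated in UTC)."""
    utc_hour = (jst_hour - JST_OFFSET_HOURS) % 24
    # minutes hours day-of-month month day-of-week year; day-of-week is '?'
    return 'cron(%d %d * * ? *)' % (minute, utc_hour)


def schedule_export(function_name, function_arn, rule_name='rds-log-export-daily'):
    import boto3  # imported lazily so daily_cron_utc works without boto3

    events = boto3.client('events')
    rule_arn = events.put_rule(
        Name=rule_name,  # hypothetical rule name
        ScheduleExpression=daily_cron_utc(0),
        State='ENABLED',
    )['RuleArn']
    events.put_targets(Rule=rule_name, Targets=[{'Id': 'export-lambda', 'Arn': function_arn}])
    # Allow the rule to invoke the function
    boto3.client('lambda').add_permission(
        FunctionName=function_name,
        StatementId=rule_name + '-invoke',  # hypothetical statement ID
        Action='lambda:InvokeFunction',
        Principal='events.amazonaws.com',
        SourceArn=rule_arn,
    )
    return rule_arn
```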

Enjoy!!

■Summary

I'm looking forward to AWS supporting this officially!!

Reference: https://blog.manabusakai.com/2016/08/cloudwatch-logs-to-s3/
