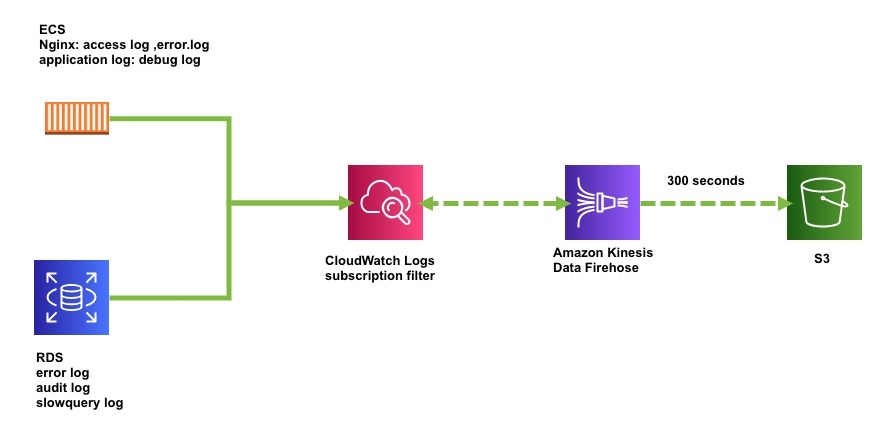

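As shown above, I had been periodically exporting CloudWatch Logs log groups to S3 with Lambda. That setup is hard to maintain (Python runtime upgrades, for example), so this time I implemented the same thing for ECS logs and RDS slow query logs (both in CloudWatch Logs) with Amazon Kinesis Data Firehose. Managing it with Terraform is very convenient, so give it a try!

Architecture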

Amazon Kinesis

- S3 bucket
  - logs.adachin.com
- Prefix
  - hoge/log/hoge-app/!{timestamp:yyyy-MM-dd/}
  - hoge/rds/cluster_hoge/audit/!{timestamp:yyyy-MM-dd/}
- Error prefix
  - hoge/log/hoge-app/result=!{firehose:error-output-type}/!{timestamp:yyyy-MM-dd/}
  - hoge/rds/cluster_hoge/audit/result=!{firehose:error-output-type}/!{timestamp:yyyy-MM-dd/}
- Buffer conditions
  - 3 MiB or 60 seconds
- Compression
  - GZIP
- Encryption
  - Disabled

CloudWatch Logs

- Subscription filter
- log_group_name
  - /ecs/app
  - /aws/rds/cluster/hoge/slowquery

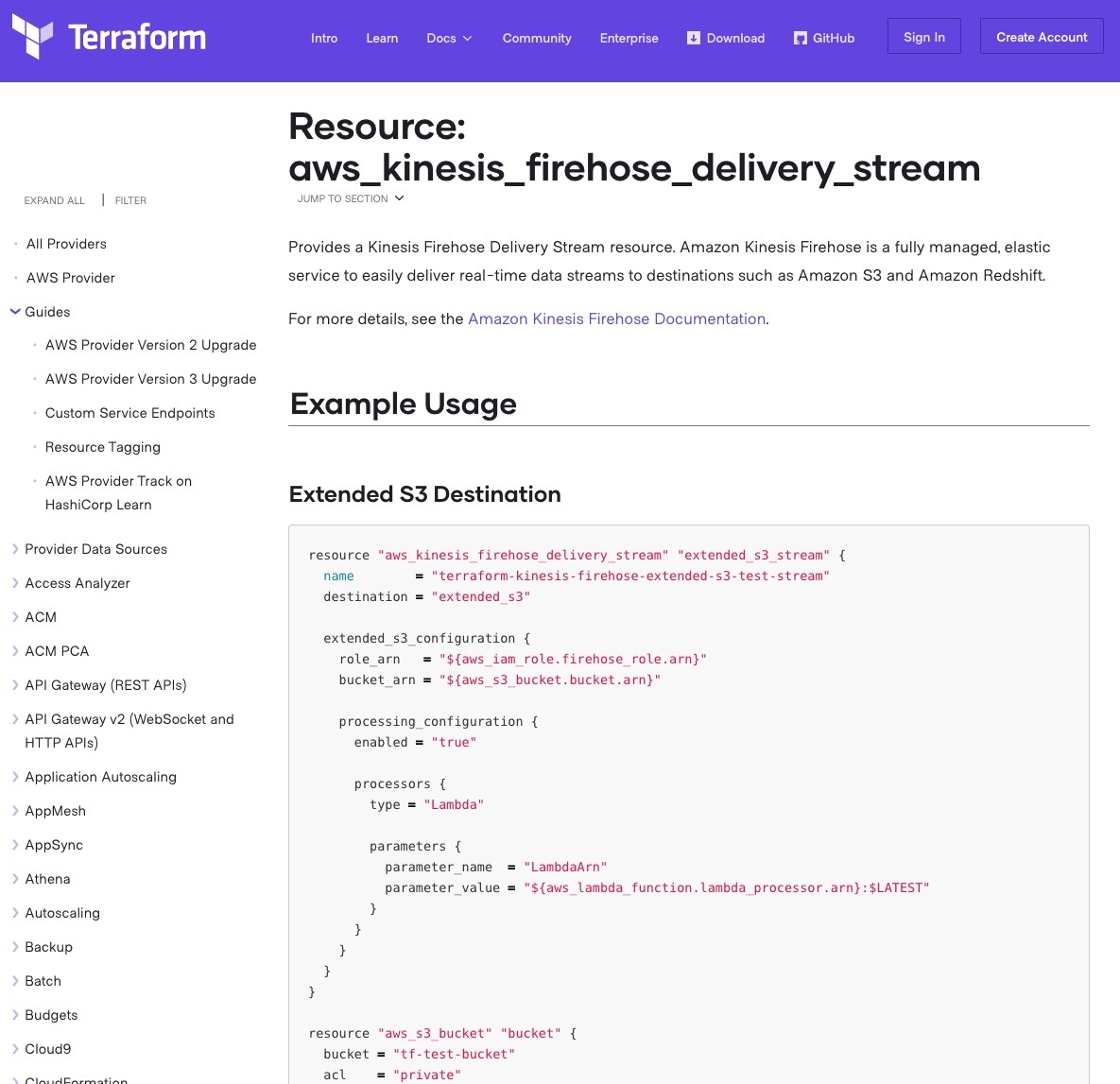

Resource: aws_kinesis_firehose_delivery_stream

https://www.terraform.io/docs/providers/aws/r/kinesis_firehose_delivery_stream.html

Terraform

- iam.tf

```hcl
## Kinesis (Firehose_Delivery_Role)
resource "aws_iam_role" "firehose_delivery_role" {
  name               = "Firehose_Delivery_Role"
  assume_role_policy = file("files/assume_role_policy/firehose_delivery_role_policy.json")
}

resource "aws_iam_policy" "kinesis_access_s3_logs_bucket" {
  name        = "Kinesis_Access_S3_Logs_Bucket"
  description = "Kinesis_Access_S3_Logs_Bucket"
  policy      = file("files/assume_role_policy/kinesis_access_s3_logs_bucket.json")
}

resource "aws_iam_role_policy_attachment" "firehose_delivery_role-attach" {
  role       = aws_iam_role.firehose_delivery_role.name
  policy_arn = aws_iam_policy.kinesis_access_s3_logs_bucket.arn
}

## Kinesis (Kinesis_Basic_Role)
resource "aws_iam_role" "kinesis_basic_role" {
  name               = "Kinesis_Basic_Role"
  assume_role_policy = file("files/assume_role_policy/kinesis_basic_role_policy.json")
}

resource "aws_iam_policy" "kinesis_allow_deliverystreams" {
  name        = "Kinesis_Allow_DeliveryStreams"
  description = "Kinesis_Allow_DeliveryStreams"
  policy      = file("files/assume_role_policy/kinesis_allow_deliverystreams.json")
}

resource "aws_iam_role_policy_attachment" "firehose_basic_role-attach" {
  role       = aws_iam_role.kinesis_basic_role.name
  policy_arn = aws_iam_policy.kinesis_allow_deliverystreams.arn
}
```

- files/assume_role_policy/firehose_delivery_role_policy.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "firehose.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

- files/assume_role_policy/kinesis_access_s3_logs_bucket.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:AbortMultipartUpload",
        "s3:GetBucketLocation",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:ListBucketMultipartUploads",
        "s3:PutObject"
      ],
      "Resource": [
        "arn:aws:s3:::logs.adachin.com",
        "arn:aws:s3:::logs.adachin.com/*"
      ]
    }
  ]
}
```

- files/assume_role_policy/kinesis_basic_role_policy.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "logs.ap-northeast-1.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

- files/assume_role_policy/kinesis_allow_deliverystreams.json

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "firehose:PutRecord",
        "firehose:PutRecordBatch"
      ],
      "Resource": [
        "arn:aws:firehose:ap-northeast-1:xxxxxxxxx:deliverystream/ecs_app",
        "arn:aws:firehose:ap-northeast-1:xxxxxxxxx:deliverystream/rds_slowquery"
      ]
    }
  ]
}
```

Replace xxxxxxxxx above with your AWS account ID.
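As a rough sketch of that substitution, the helper below prints a policy file with the run of `x` placeholders replaced by a 12-digit account ID. The function name `fill_account_id` is made up for illustration and is not part of the original setup. It only writes to stdout, so the file on disk is left untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): print a policy file with
# the "xxxxxxxxx" placeholder replaced by the given AWS account ID.
fill_account_id() {
  local account_id="$1" policy_file="$2"

  # AWS account IDs are 12 digits; reject anything else early.
  if ! printf '%s' "$account_id" | grep -Eq '^[0-9]{12}$'; then
    echo "account ID must be 12 digits: $account_id" >&2
    return 1
  fi

  # Replace any run of nine or more "x" characters with the account ID.
  sed "s/x\{9,\}/${account_id}/g" "$policy_file"
}
```

One possible way to call it, assuming the AWS CLI is installed and configured, is `fill_account_id "$(aws sts get-caller-identity --query Account --output text)" files/assume_role_policy/kinesis_allow_deliverystreams.json`.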

- variables.tf

```hcl
# The three lists below are matched by index, so keep them in the same order.

## CloudWatch Logs Group
variable "cloudwatch_logs_group" {
  default = [
    "/ecs/app",
    "/aws/rds/cluster/hoge/slowquery",
  ]
}

## Kinesis (S3 prefix for each log group)
variable "kinesis_s3_cloudwatch_logs_group" {
  default = [
    "hoge/log/hoge-app",
    "hoge/rds/cluster_hoge/audit",
  ]
}

## Kinesis Data Firehose Name (must match the ARNs in kinesis_allow_deliverystreams.json)
variable "kinesis_name_s3" {
  default = [
    "ecs_app",
    "rds_slowquery",
  ]
}
```

- kinesis.tf

```hcl
resource "aws_cloudwatch_log_group" "kinesis" {
  name = "/aws/kinesisfirehose/"
}

# One delivery stream per entry in variables.tf
resource "aws_kinesis_firehose_delivery_stream" "firehose_s3" {
  count       = length(var.kinesis_s3_cloudwatch_logs_group)
  name        = element(var.kinesis_name_s3, count.index)
  destination = "extended_s3"

  extended_s3_configuration {
    role_arn            = aws_iam_role.firehose_delivery_role.arn
    bucket_arn          = "arn:aws:s3:::logs.adachin.com"
    buffer_size         = 3
    buffer_interval     = 60
    prefix              = join("", [element(var.kinesis_s3_cloudwatch_logs_group, count.index), "/!{timestamp:yyyy-MM-dd/}"])
    error_output_prefix = join("", [element(var.kinesis_s3_cloudwatch_logs_group, count.index), "/result=!{firehose:error-output-type}/!{timestamp:yyyy-MM-dd/}"])
    compression_format  = "GZIP"

    cloudwatch_logging_options {
      enabled         = true
      log_group_name  = aws_cloudwatch_log_group.kinesis.name
      log_stream_name = "DestinationDelivery"
    }
  }
}

# Forward each CloudWatch Logs group to the delivery stream at the same index
resource "aws_cloudwatch_log_subscription_filter" "forward_cloudwatchlogs_to_s3" {
  count           = length(var.cloudwatch_logs_group)
  name            = "forward_cloudwatchlogs_to_s3"
  log_group_name  = element(var.cloudwatch_logs_group, count.index)
  filter_pattern  = ""
  destination_arn = element(aws_kinesis_firehose_delivery_stream.firehose_s3.*.arn, count.index)
  role_arn        = aws_iam_role.kinesis_basic_role.arn
  distribution    = "ByLogStream"
}
```

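Using join and element keeps the configuration from being duplicated: the per-stream values live in variables.tf, and each resource picks its entry by count.index.

Verification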

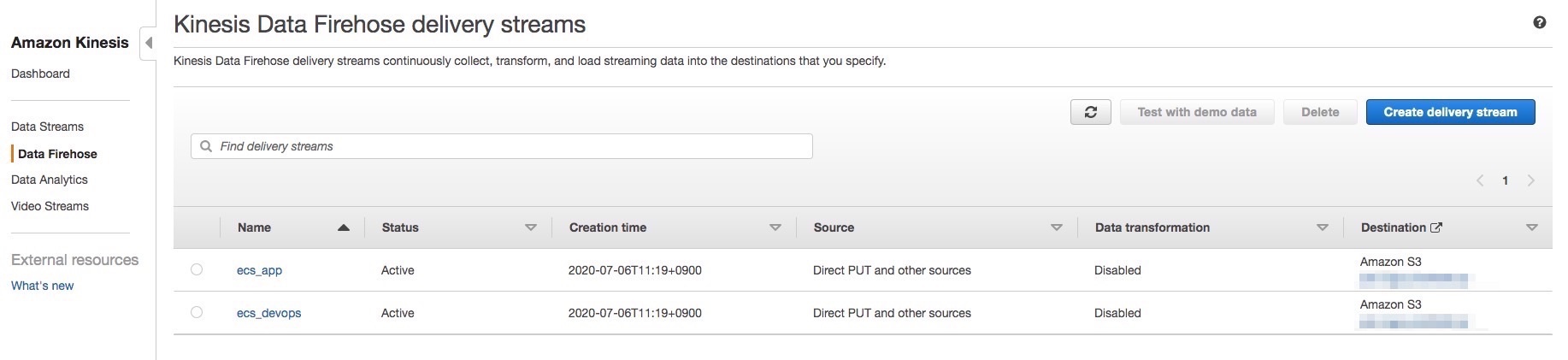

- Kinesis Data Firehose delivery streams

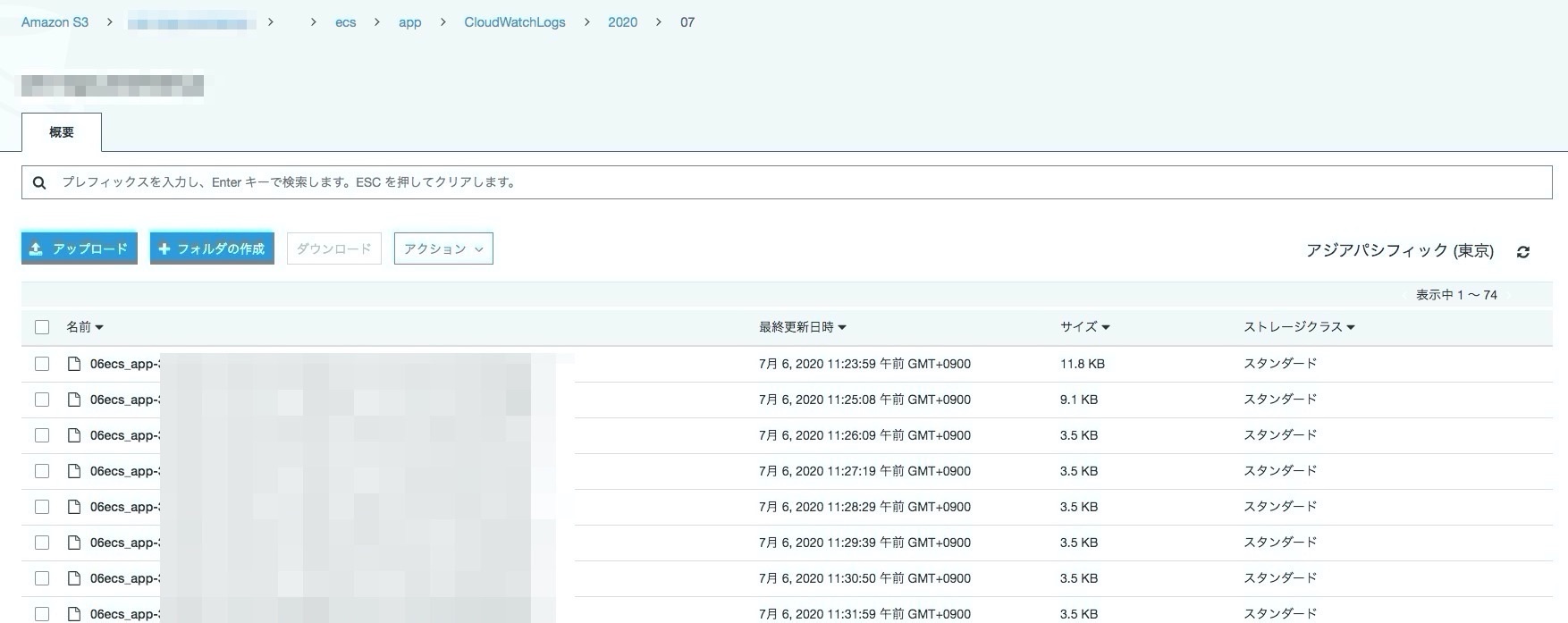

- S3

- Download log

```shell
$ gunzip 06ecs_app-3-2020-07-06-02-20-06-xxxxxxxxxxxxx.gz
```
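A single gunzip may not be enough here. CloudWatch Logs already gzip-compresses the records it hands to Firehose, so with GZIP compression enabled on the delivery stream the object can end up compressed twice. The sketch below assumes that is what is happening and simply keeps decompressing while the data still starts with the gzip magic bytes. The function name `decode_firehose_log` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original post): print the fully
# decompressed contents of a Firehose-delivered object to stdout.
decode_firehose_log() {
  local src="$1" tmp
  tmp="$(mktemp)" || return 1
  cp "$src" "$tmp" || { rm -f "$tmp"; return 1; }

  # Peel off gzip layers while the data starts with the magic bytes 1f 8b.
  while [ "$(head -c 2 "$tmp" | od -An -tx1 | tr -d ' \n')" = "1f8b" ]; do
    # Read from stdin so gzip does not care about the file suffix.
    gzip -dc < "$tmp" > "$tmp.next" || { rm -f "$tmp" "$tmp.next"; return 1; }
    mv "$tmp.next" "$tmp"
  done

  cat "$tmp"
  rm -f "$tmp"
}
```

If jq is installed, something like `decode_firehose_log 06ecs_app-3-2020-07-06-02-20-06-xxxxxxxxxxxxx.gz | jq -r '.logEvents[].message'` should print the raw log lines, since each record is a JSON object carrying a `logEvents` array.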

Summary

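No more time spent writing Lambda code, which is great! That said, the files stored in S3 are gzip-compressed, so I can see myself forgetting the step of renaming the file to a .gz extension and then checking it with the jq command.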

Adjust the prefix and the subscription filter pattern to suit your environment.

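Note: the post below covers converting the logs themselves to LTSV format, so take a look if you want to manage them as raw logs.

[AWS][Terraform] Converting CloudWatch Logs access logs to LTSV format with Lambda and saving them to S3 with Kinesis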
